A case against logging

This is a repost of an article I published on the Realmac Software blog.

I don’t use logging. From the simple printf or NSLog to more complex logging frameworks such as CocoaLumberjack. In this post I will try to explain the reasoning behind it, from a development, testing and release perspective, and propose alternatives that are, in my opinion, much more powerful.

During development

A common use case for logging is to follow the flow while developing. So one would insert some NSLog statements throughout the code, mainly to figure out if some part of the code has been executed.

I have two problems with this approach.

First, it requires to build your project and run again. If you are working on a very small project it might be acceptable but it can become a large hit to your productivity if your project takes even only one minute to build. Also, some code path might only occur after the application has been running for some time and you probably don’t want to wait for it occur after each launch.

Second, once the message has been logged, that’s it, all the information you might have been after has been written to the console and there’s no more you can go after. You might be wondering about the state of other parts of the application but there is just no way you can find out about them since your logging statements were added at compile time and logging more things would require you to build your application again.

I have a very simple solution for both of these problems: use the debugger and use breakpoints.

A breakpoint can be added at any time during the execution of your program and does not require recompiling. The huge benefit of breakpoints is that when you broke in the debugger, it can give everything you might want to know about the current state of your program. It actually has a perfect, complete snapshot of your program at this very moment in time. Just be curious and dive into it. You might want to ask for the current value of some variables, ask for a backtrace of a current thread, step into some function, disassemble some parts of the framework, and much much more.

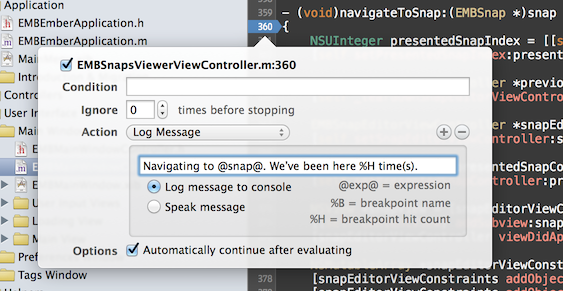

One of the critics I hear more often against breakpoints is: “but I don’t want to break!”. Well, no one said that you had to pause execution when a breakpoint is reached. In Xcode you can define the type of action your breakpoint should take and whether it should continue execution after evaluating. So if you’re into logging, you could have the action being logging a message and continue after evaluating. This gives you even more power than simple logging since you can decide to log a hit count for example, log only if a condition has been met, you name it. And remember, you don’t need to build the application again, you can add a breakpoint right in the middle of the execution of your program.

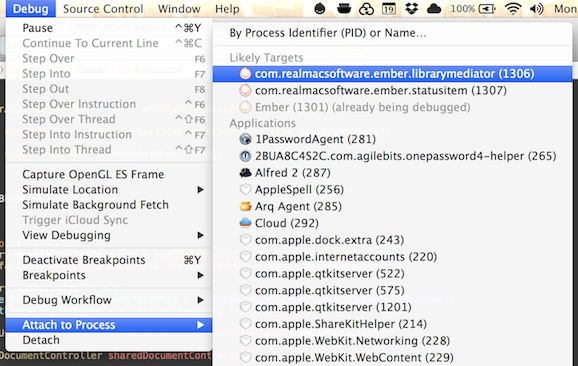

A situation where you could run into limitations with breakpoints is when you are debugging multiple programs simultaneously. Let’s take an example: you have an application that uses a helper application or an XPC service launched by the main application. Both the main and helper applications are in the same project and even share some code. When you run the main application, the helper application will be launched. Now see, only the main application will be running in the debugger and any breakpoint in portions of the code run by the helper application will not be hit. However, there is a solution for this. You can simply attach the debugger to your helper application, directly from within Xcode.

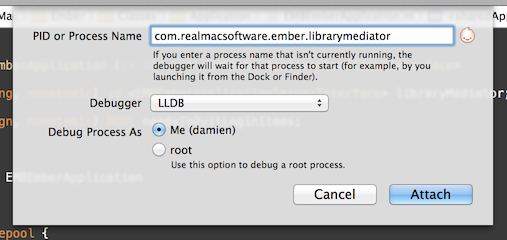

Once attached, your helper application will be running in the debugger too and your breakpoints will be hit, just like they do in the main application. If you need to break early in the lifetime of the helper application or just cannot wait for it to launch to attach to it, you can also specify a process ID or name and it will be attached as soon as it launches. You can set it up directly from Xcode, it only uses the LLDB process attach --waitfor though.

During testing

Another area where logging is particularly unhelpful is during testing. During testing, you want your bugs to be as obvious as possible so that you can catch them early and not ship them in your product.

I have a solution for this: assertions! Wherever I expect a variable or a method return to have a particular value I tend to assert it with NSParameterAssert (or NSCParameterAssert when inside a function). It is important to know that NSParameterAssert throws a NSInternalInconsistencyException Objective-C exception when the assert fails. Thus, make sure that Objective-C exceptions are enabled for each configuration you need them in your build settings. We have them enabled in every configuration, including the App Store release configuration, for reasons that will become clearer in the next paragraph.

Second, by default a Cocoa application doesn’t crash in case of an uncaught exception. While this might sound like a good thing, it really isn’t since it simply logs the exception and backtrace to the console and silently fails. You can set NSApplicationCrashOnExceptions to YES in the user defaults and have your application to cleanly crash when an uncaught exception happens. Again, we have this turned on in every configuration, including the App Store release one.

With all this set up, your application should nicely crash and a crash report will be generated every time your tester encounters some situation where the application is not behaving as it should. Trust me, this is invaluable. A good crash log is far more useful than a thousand lines of console logs since it gives you a perfect snapshot of what went wrong at the exact moment when your application crashed.

Relying on logging during testing means a tester should really always keep the Console open in order to catch issues that might just silently fail and log. I might sound like a broken record but a clean crash is a very clear hint that something went wrong and it just saves both you and your tester’s time, by not having to peak through hundred of lines of log to find oddities.

Once the product has been released

This is, in my opinion, the most interesting part. To start with, I’d like to discuss something that might be a bit controversial: you should never assume your customers to be technical. Asking a user to copy and paste logs from the Console, launch your application with a special keyboard shortcut to enable logging mode, modifying some plist somewhere ~/Library, etc… should never be an option. In Cocoa, we have much better tools to report problems to users and we should really use them rather than relying on technologies such as logging that should never be applicable outside of a command line environment.

This said, what you should do when you encounter problems in your released application? First of all, use NSError. Rather than log an error, create an instance of NSError and return it to the caller, often by mean of a error by-ref parameter in the method. By doing this, the caller is in charge of inspecting the error if the method didn’t return the expected result and can either attempt some recovery option or have it bubble up to the interface where it can be presented to the user. Error presentation can be anything from an alert to an inline text in the current view. The key concept is that the error is presented exactly where the problem happened, making it obvious to the user that something went wrong and explaining the problem.

As in every piece of software, even after thorough testing, some unexpected behavior might still occur. As I stated above, in such cases a clean crash is far more appropriate than a silent failure generating a log while potentially leaving the application in a state that might damage user data.

Therefore, you should avoid silent failures at all cost. By using assertions in place of logging, by enabling exceptions in release configurations and by crashing on exceptions you can ensure that your application will cleanly crash in case something unexpected happens. And you will receive a crash report. Obviously, all this goes in hand with thorough testing. After all you want to minimize the number of crashes for your users.